In this article, we will learn how to use Redis caching in an ASP.NET Core Web API. Before starting, please read my previous article, In-Memory Caching in ASP.NET Core-Basic guide of Caching, where we discussed caching, in-memory caching in ASP.NET Core, and other related concepts.

Here we will cover distributed caching, Redis, and setting up Redis caching in ASP.NET Core.

Prerequisites

- Basic knowledge of caching and in-memory caching

- Visual Studio 2019/2022

- .NET Core SDK 5.0 (SDK 3.1 or 6.0 also works)

What is Distributed Caching?

A distributed cache is a cache shared by multiple app servers, typically maintained as an external service to the app servers that access it. A distributed cache can improve the performance and scalability of an ASP.NET Core app, especially when the app is hosted by a cloud service or a server farm.

Like an in-memory cache, it can improve the ASP.NET Core application’s response time considerably. However, the configuration of a distributed cache is implementation-specific: several cache providers support distributed caching, and Redis and NCache are two of the popular ones.

Pros of Distributed Caching

A distributed cache has several advantages over caching scenarios where cached data is stored on individual app servers:

- Cached data is coherent (consistent) across requests to multiple servers.

- Multiple applications or servers can share one Redis server instance to cache data, which reduces maintenance cost in the long run.

- The cache is not lost on server restarts or application deployments, because it lives outside the application.

- It does not use the local server’s resources.

Cons of Distributed Caching

There are two main disadvantages of distributed caching:

- The cache is slower to access because it is no longer held locally by each application instance.

- The requirement to run a separate cache service might add complexity to the solution.

What is Redis Caching?

Redis (Remote Dictionary Server) is an open-source, in-memory data structure store, used as a database, cache, and message broker. It supports data structures such as strings, hashes, lists, sets, sorted sets with range queries, bitmaps, hyperloglogs, geospatial indexes with radius queries, and streams.

It is also a NoSQL database and is used at tech companies such as Stack Overflow, Flickr, and GitHub. Redis is a great option for implementing a highly available cache that reduces data access latency and improves application response time. As a result, we can take a large share of the load off our database.

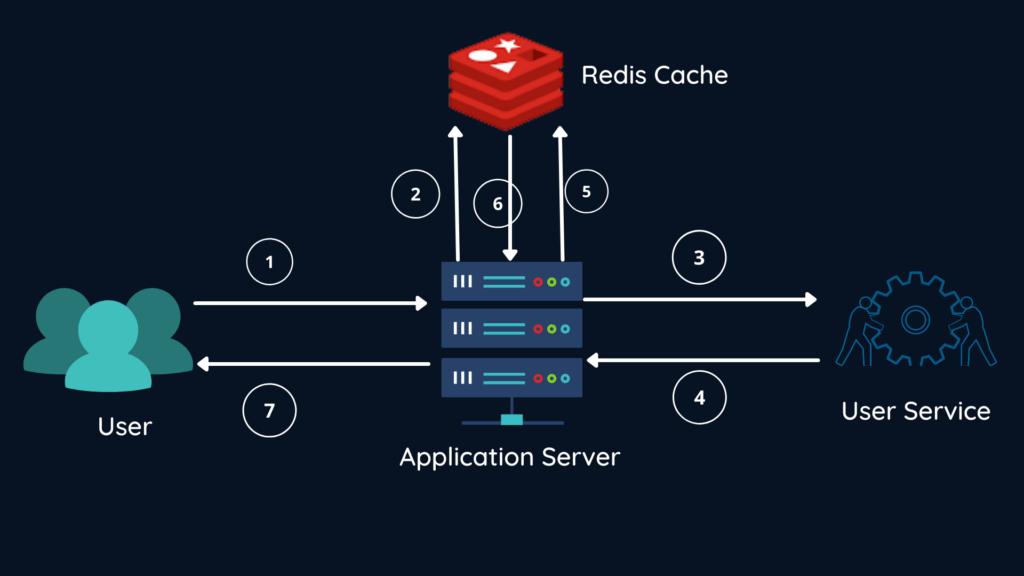

Let’s walk through how a request is served with a Redis cache in place:

- The user requests a user object.

- The app server checks whether the user is already in the cache and returns the object if it is present.

- If it is not, the app server makes an HTTP call to the users service to retrieve the list of users.

- The users service returns the users list to the app server.

- The app server stores the users list in the distributed (Redis) cache.

- Subsequent requests are served from the cached version until it expires (TTL).

- The user gets the cached user object.
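The flow above can be sketched in a few lines of C#. This is only an illustration that needs no Redis server: `TtlCache` and `UserLookup` are hypothetical names, the dictionary stands in for Redis, and the `fetchUsers` delegate stands in for the HTTP call to the users service.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for the Redis cache: a dictionary whose entries carry an expiry time (TTL).
public sealed class TtlCache<TValue>
{
    private readonly Dictionary<string, (TValue Value, DateTimeOffset ExpiresAt)> _entries =
        new Dictionary<string, (TValue Value, DateTimeOffset ExpiresAt)>();

    public bool TryGet(string key, out TValue value)
    {
        if (_entries.TryGetValue(key, out var entry) && entry.ExpiresAt > DateTimeOffset.UtcNow)
        {
            value = entry.Value;
            return true;
        }

        _entries.Remove(key);   // drops an expired entry; does nothing if the key is absent
        value = default!;
        return false;
    }

    public void Set(string key, TValue value, TimeSpan ttl) =>
        _entries[key] = (value, DateTimeOffset.UtcNow.Add(ttl));
}

// Hypothetical app-server component that follows the steps listed above.
public sealed class UserLookup
{
    private readonly TtlCache<List<string>> _cache = new TtlCache<List<string>>();
    private readonly Func<List<string>> _fetchUsers;   // stands in for the HTTP call to the users service

    public int ServiceCalls { get; private set; }

    public UserLookup(Func<List<string>> fetchUsers) => _fetchUsers = fetchUsers;

    public List<string> GetUsers()
    {
        // Check the cache first and return the cached list if present.
        if (_cache.TryGet("users", out var cached))
            return cached;

        // Cache miss: call the users service.
        ServiceCalls++;
        var users = _fetchUsers();

        // Store the result with a TTL so later requests are served from the cache.
        _cache.Set("users", users, TimeSpan.FromMinutes(2));
        return users;
    }
}
```

Calling `GetUsers()` twice in a row invokes the delegate only once; the second call is answered from the cache until the two-minute TTL (an arbitrary value chosen for the sketch) runs out.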

IDistributedCache Interface

ASP.NET Core provides the IDistributedCache interface to interact with distributed caches, including Redis. The interface provides the following methods to perform actions on the actual cache:

- GetAsync – Gets the value from the cache server based on the passed key.

- SetAsync – Accepts a key and a value (as a byte array) and stores the value on the cache server.

- RefreshAsync – Resets the sliding expiration timer (more about this later in the article), if any.

- RemoveAsync – Deletes the cache data based on the key.
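As a minimal sketch of these four methods in action, the snippet below runs them against MemoryDistributedCache, the in-memory implementation of IDistributedCache, so it works without a Redis server. It assumes the Microsoft.Extensions.Caching.Memory package is referenced; the class name `DistributedCacheTour` and the key "name" are made up for the example.

```csharp
using System;
using System.Text;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Distributed;
using Microsoft.Extensions.Caching.Memory;
using Microsoft.Extensions.Options;

// Hypothetical helper class, used only to illustrate the interface methods.
public static class DistributedCacheTour
{
    // In-memory IDistributedCache, so the sketch does not need a running Redis server.
    public static IDistributedCache CreateInMemoryCache() =>
        new MemoryDistributedCache(Options.Create(new MemoryDistributedCacheOptions()));

    public static async Task<string> RunAsync(IDistributedCache cache)
    {
        var options = new DistributedCacheEntryOptions()
            .SetSlidingExpiration(TimeSpan.FromMinutes(2));

        // SetAsync: values are stored as byte arrays.
        await cache.SetAsync("name", Encoding.UTF8.GetBytes("corespider"), options);

        // GetAsync: returns the stored bytes, or null if the key is missing.
        byte[] bytes = await cache.GetAsync("name");
        string value = Encoding.UTF8.GetString(bytes);

        // RefreshAsync: resets the sliding expiration timer without reading the value.
        await cache.RefreshAsync("name");

        // RemoveAsync: deletes the entry, so the next read returns null.
        await cache.RemoveAsync("name");
        byte[] afterRemove = await cache.GetAsync("name");

        return afterRemove == null ? value : "entry was not removed";
    }
}
```

The same `RunAsync` method should work unchanged against the Redis-backed implementation, since it only depends on the interface.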

How to set up Redis on a Windows Machine

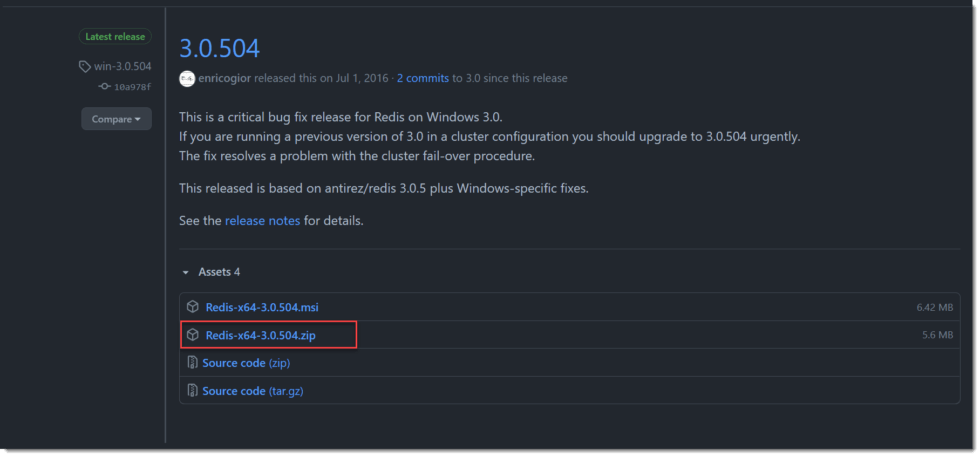

To set up Redis on a Windows machine, download it from the open-source Redis GitHub repo. You can use either the zip archive or the MSI installer.

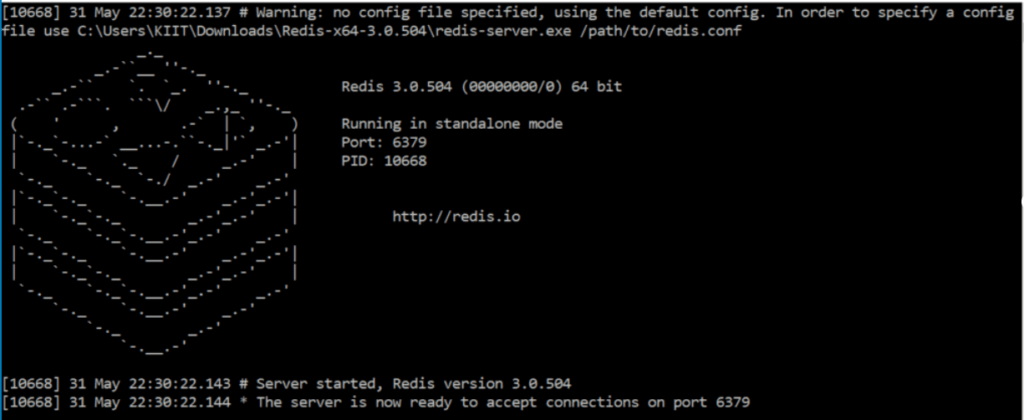

Extract the highlighted zip archive and run the redis-server.exe file.

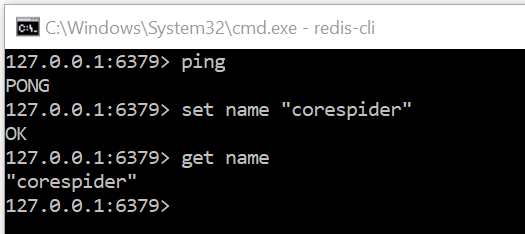

Now, the Redis server is up and running. We can test it using the redis-cli command.

Open a new command prompt, run redis-cli, and try the following commands:

Redis CLI Commands

    # Setting a cache entry
    127.0.0.1:6379> SET name "corespider"
    OK

    # Getting the cache entry
    127.0.0.1:6379> GET name
    "corespider"

    # Deleting a cache entry
    127.0.0.1:6379> GET name
    "corespider"
    127.0.0.1:6379> DEL name
    (integer) 1

Now that we have installed the Redis server and seen that it works properly, we can modify the API to use Redis-based distributed caching.

Integrating Redis Cache In ASP.NET Core

In the previous article on In-Memory Caching in ASP.NET Core, we created an ASP.NET Core Web API project. This API connects to the database via Entity Framework Core and returns the customer list.

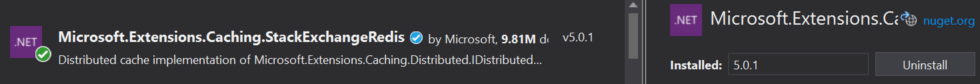

Install the package below using the NuGet Package Manager Console, or find it through the NuGet package search:

    Install-Package Microsoft.Extensions.Caching.StackExchangeRedis

To support the Redis cache let’s configure the cache in startup.cs/ConfigureServices method.

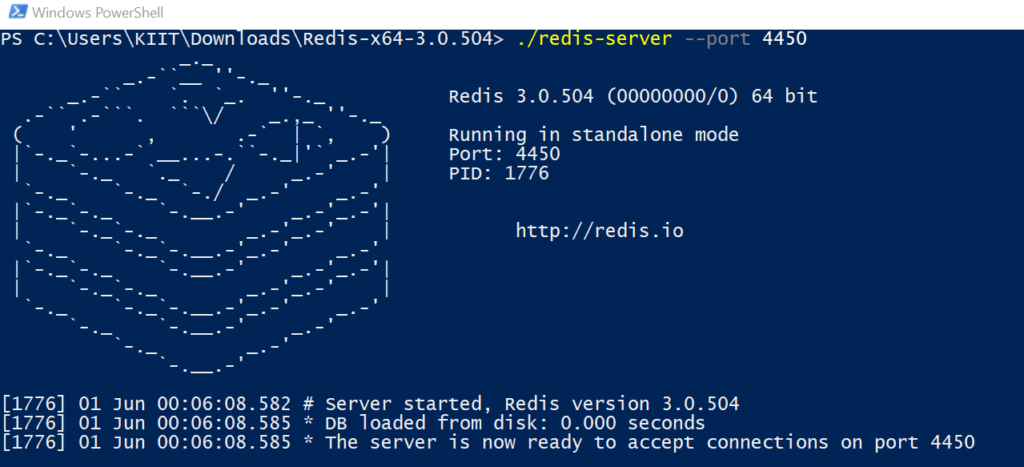

By default, Redis runs on the local 6379 port. To change this, open up Powershell and run the following command.

./redis-server --port {your_port}

services.AddStackExchangeRedisCache(options =>

{

options.Configuration = "localhost:4450";

});Implement Redis Cache memory in Controller

    // Usings needed at the top of the controller file (in addition to the project's own namespaces):
    // using System.Text;
    // using Microsoft.EntityFrameworkCore;
    // using Microsoft.Extensions.Caching.Distributed;
    // using Microsoft.Extensions.Caching.Memory;
    // using Newtonsoft.Json;

    private readonly ApplicationDbContext _context;
    private readonly IMemoryCache memoryCache;
    private readonly IDistributedCache distributedCache;

    public CustomersController(ApplicationDbContext context, IMemoryCache memoryCache, IDistributedCache distributedCache)
    {
        _context = context;
        this.memoryCache = memoryCache;
        this.distributedCache = distributedCache;
    }

    // GET: api/Customers
    [HttpGet]
    public async Task<ActionResult<IEnumerable<Customer>>> GetCustomers()
    {
        var cacheKey = "Allcustomer";
        string serializedCustomerList;
        List<Customer> customerList;

        // Try the distributed cache first.
        var redisCustomerList = await distributedCache.GetAsync(cacheKey);
        if (redisCustomerList != null)
        {
            // Cache hit: byte array -> JSON string -> list of customers.
            serializedCustomerList = Encoding.UTF8.GetString(redisCustomerList);
            customerList = JsonConvert.DeserializeObject<List<Customer>>(serializedCustomerList);
        }
        else
        {
            // Cache miss: load from the database, then store a serialized copy in Redis.
            customerList = await _context.Customers.ToListAsync();
            serializedCustomerList = JsonConvert.SerializeObject(customerList);
            redisCustomerList = Encoding.UTF8.GetBytes(serializedCustomerList);

            var options = new DistributedCacheEntryOptions()
                .SetAbsoluteExpiration(DateTime.Now.AddMinutes(10))
                .SetSlidingExpiration(TimeSpan.FromMinutes(2));

            await distributedCache.SetAsync(cacheKey, redisCustomerList, options);
        }

        return customerList;
    }

- Constructor: We inject IDistributedCache into the controller through its constructor, alongside the DbContext and IMemoryCache.

- cacheKey: We set the key internally in the code.

- GetAsync: We access the distributed cache object to get data from Redis using the key “Allcustomer”.

- if branch: If the key has a value in Redis, the data comes back as a byte array. We convert this array to a string, deserialize the string into a list of Customer objects, and send back the data.

- else branch: If the value does not exist in Redis, we access the database via EF Core, get the data, serialize it to a byte array, and set it in Redis together with the expiration options.
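The get-or-load logic in the controller can be factored into a reusable extension method. The sketch below is one possible shape, not part of the original project: the name `GetOrSetJsonAsync` is hypothetical, and it swaps in System.Text.Json for the Newtonsoft.Json calls used above so that it needs no extra package beyond the caching abstractions.

```csharp
using System;
using System.Text.Json;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Distributed;

// Hypothetical helper; the name and signature are assumptions made for this sketch.
public static class DistributedCacheJsonExtensions
{
    public static async Task<T> GetOrSetJsonAsync<T>(
        this IDistributedCache cache,
        string key,
        Func<Task<T>> factory,
        DistributedCacheEntryOptions options)
    {
        // Cache hit: deserialize the stored UTF-8 JSON bytes and return them.
        byte[] cached = await cache.GetAsync(key);
        if (cached != null)
            return JsonSerializer.Deserialize<T>(cached);

        // Cache miss: load the value (for example from the database) and cache its JSON bytes.
        T value = await factory();
        await cache.SetAsync(key, JsonSerializer.SerializeToUtf8Bytes(value), options);
        return value;
    }
}
```

With such a helper, the body of GetCustomers could shrink to a single call along the lines of `distributedCache.GetOrSetJsonAsync("Allcustomer", () => _context.Customers.ToListAsync(), options)`. Whether System.Text.Json serializes your entities the way you expect depends on the model, so treat this as a starting point.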

SlidingExpiration: Gets or sets how long a cache entry can be inactive (e.g. not accessed) before it will be removed. This will not extend the entry lifetime beyond the absolute expiration (if set).

AbsoluteExpiration: Gets or sets an absolute expiration date for the cache entry.

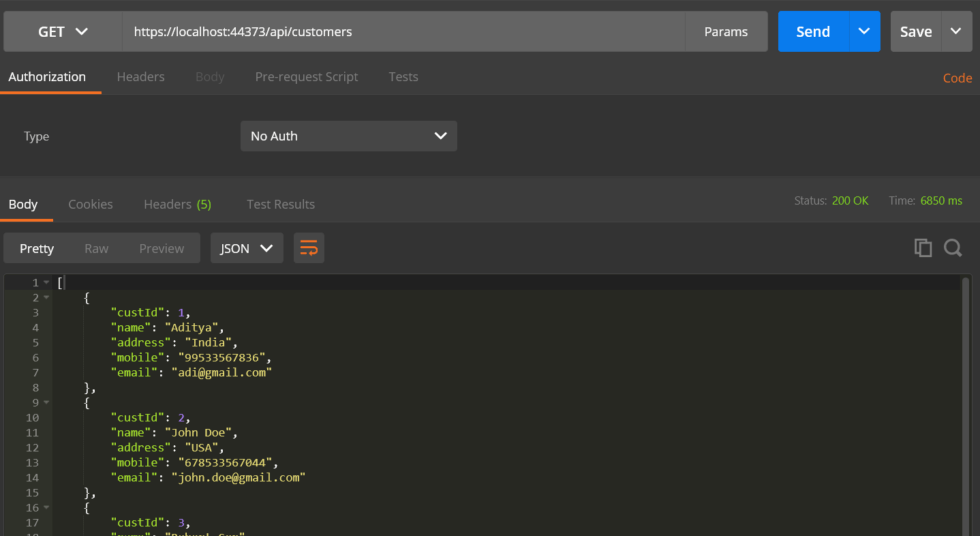

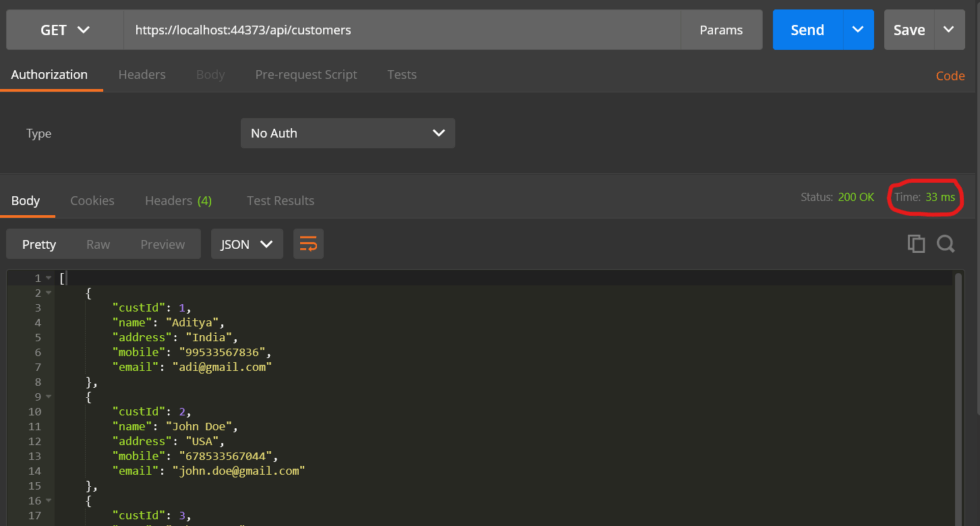

Test the Redis Cache in Postman

Let’s open Postman and test the cache performance.

The first API call took about 6850 ms to return a response. During that call, our API also stored customerList in the Redis cache. The second call is therefore served directly from the Redis cache, which should reduce the response time significantly. Indeed, it takes only 33 ms.

How to conditionally inject an IDistributedCache implementation of MemoryDistributedCache during local development?

Suppose you are using the key-value caching functionality of Redis in an Azure Function (or an ASP.NET Core web app) and you want to develop and debug the code locally. Do you need to install Redis locally? Not necessarily. With a couple of lines of DI, you can “fool” your classes into using the MemoryDistributedCache implementation of the IDistributedCache interface.

In this snippet, we check the value of an environment variable and conditionally inject the desired implementation of IDistributedCache:

    [TestMethod]
    public void Condition_Injection_Of_IDistributedCache()
    {
        // Config is assumed to be an IConfiguration member of the test class.
        var builder = new ConfigurationBuilder();
        builder.AddJsonFile("settings.json", optional: false);
        Config = builder.Build();

        ServiceCollection coll = new ServiceCollection();
        if (System.Environment.GetEnvironmentVariable("localdebug") == "1")
        {
            // Local development: in-memory implementation, no Redis server needed.
            coll.AddDistributedMemoryCache();
        }
        else
        {
            // Otherwise: Redis, with server and port read from settings.json.
            coll.AddStackExchangeRedisCache(options =>
            {
                string server = Config["redis-server"];
                string port = Config["redis-port"];
                string cnstring = $"{server}:{port}";
                options.Configuration = cnstring;
            });
        }

        var provider = coll.BuildServiceProvider();
        var cache = provider.GetService<IDistributedCache>();
    }

How do we benchmark the performance of Redis cache?

The primary motivation for using a distributed cache is to make the application perform better. In most scenarios the central data storage becomes the bottleneck. Benchmarking the distributed cache gives us an idea of how much latency and throughput to expect from the cache for various document sizes.
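A rough way to get such numbers is to time a batch of writes and reads for each payload size with a Stopwatch. The sketch below is a simple illustration rather than a rigorous benchmark: `CacheBenchmark`, the key format, and the default of 100 iterations are all assumptions, and it works with any IDistributedCache you pass in.

```csharp
using System;
using System.Diagnostics;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Distributed;

// Hypothetical helper for a quick latency measurement.
public static class CacheBenchmark
{
    // Returns the average time per SetAsync and per GetAsync call, in milliseconds.
    public static async Task<(double AvgSetMs, double AvgGetMs)> MeasureAsync(
        IDistributedCache cache, int payloadBytes, int iterations = 100)
    {
        // Random payload of the requested size, standing in for a serialized document.
        var payload = new byte[payloadBytes];
        new Random(42).NextBytes(payload);

        var options = new DistributedCacheEntryOptions
        {
            AbsoluteExpirationRelativeToNow = TimeSpan.FromMinutes(1)
        };

        // Time the writes.
        var setTimer = Stopwatch.StartNew();
        for (int i = 0; i < iterations; i++)
            await cache.SetAsync($"bench:{payloadBytes}:{i}", payload, options);
        setTimer.Stop();

        // Time the reads of the same keys.
        var getTimer = Stopwatch.StartNew();
        for (int i = 0; i < iterations; i++)
            await cache.GetAsync($"bench:{payloadBytes}:{i}");
        getTimer.Stop();

        return (setTimer.Elapsed.TotalMilliseconds / iterations,
                getTimer.Elapsed.TotalMilliseconds / iterations);
    }
}
```

Running `MeasureAsync` for a few sizes, for example 1 KB, 10 KB, and 100 KB, against the Redis-backed cache gives a first impression of how latency grows with document size. Throughput can be estimated as the number of iterations divided by the total elapsed time.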

Conclusion

Leave your questions and suggestions in the comment section below. If this article helped you, do not forget to share it with your developer community. Happy Coding 🙂


Jayant Tripathy

Coder, Blogger, YouTuber. A passionate developer focused on learning and working with new technology.